In a world where artificial intelligence (AI) is everywhere, from productivity tools to virtual assistants, IT professionals in small and medium-sized businesses (SMBs) face a delicate balance. On the one hand, AI drives efficiency and innovation; on the other, it opens the door to risks like deepfakes, data breaches, and the unauthorized use of AI tools, known as Shadow AI. Whether you manage IT for clients or make technical decisions internally, you know that strengthening network infrastructure isn't optional: it's essential.

This practical guide explores these challenges, inspired by recent discussions on AI and cybersecurity, such as those seen in specialized reports and market analyses. We'll simplify the topic, assess real risks, and show how solutions like Lumiun DNS can help protect your network simply and effectively. The focus here is on concrete actions for SMEs, where resources are limited but exposure to threats is high.

Understanding the Risks: AI as an Ally and a Threat

AI isn't just a buzzword; it's shaping the future of cybersecurity, but it's also creating new attack vectors. As highlighted in studies on technological innovation, such as those by CESAR in Brazil, the complexity of AI systems can surpass human capabilities, leading to an endless "cat-and-mouse game" between defenders and attackers.

What is Shadow AI and why does it concern SMEs?

Shadow AI refers to the use of generative AI tools by employees without IT approval or oversight. Consider employees accessing ChatGPT or similar services on their smartphones for daily tasks and entering sensitive company data. This can lead to data exfiltration, the unauthorized transfer of data out of an IT system or network, with confidential information ending up on third-party servers. Attackers can also exfiltrate data deliberately, for example by tunneling it through the DNS protocol.

- Common risks: Leakage of intellectual property, such as code or business strategies, especially if the data is used to train AI models.

- Impact on SMEs: Without dedicated IT teams, monitoring is more difficult. One study shows that 38% of employees share sensitive work information with AI tools without their employer's permission, increasing vulnerabilities. For example, startups in the e-commerce sector, such as small Brazilian online stores, have reported cases where employees used free AI tools to optimize product descriptions but ended up exposing customer data. That exposure creates LGPD compliance risks and can result in unexpected fines, as seen in cases of micro-enterprises fined by the ANPD for data processing non-compliance.

In hybrid work environments, blocking specific APIs or IPs is challenging, but ignoring the problem can result in costly breaches.

Deepfakes: The Visual and Psychological Threat

Deepfakes are AI-generated fake videos or audio files capable of imitating real voices and faces. In the cybersecurity context, they are used in fraud, such as phishing or disinformation campaigns.

- Real-world examples: Attacks involving a deepfake CEO requesting urgent transfers, or manipulated video calls used to gain access to systems. In Brazil, small fintechs saw an 822% increase in deepfake fraud between the first quarter of 2023 and the first quarter of 2024, with criminals using AI to impersonate executives and request fraudulent financial transfers. A notable case involved iGaming companies: fraud on online betting platforms grew by more than 30% in Latin America in 2024, with deepfakes facilitating betting scams and affecting SMEs in the sector.

- Challenges for technical decision-makers: Detecting deepfakes requires advanced tools, but the first step is to prevent access to websites or services that facilitate their creation.

As mentioned in analyses of AI trends, these predictive pieces of content can act as ticking time bombs, leaking strategic information before it's even published.

Other hidden dangers: data poisoning and intellectual property leaks

In addition to Shadow AI and deepfakes, there's data poisoning, where attackers tamper with AI datasets to insert backdoors. This is like a "sleeper agent" in a science fiction movie: the system works fine until it's activated.

For developers at SMEs, inserting proprietary code into cloud-based AI tools increases the risk of intellectual property leaks. Reports indicate that vendors, even reputable ones, can be targets of attacks on their infrastructure. A practical example is that of Brazilian SMEs in the cryptocurrency sector that suffered leaks of sensitive data after the unauthorized use of AI tools, leading to breaches that damaged their market reputation, as highlighted in reports of silent threats from generic AIs causing millions in losses.

Unauthorized use of AI, known as shadow AI, also raises the cost of incidents: reports put the increase in the average cost of a breach at R$591,000. In Brazil, the average cost per data breach rose to R$7.19 million in 2025, a 6.5% increase compared to the previous year. Sectors such as finance face even greater losses, reaching R$8.92 million per incident, with damage to companies' reputation and market stability.

AI Risk FAQs

- What causes AI data leaks? Inserting sensitive information into generative tool prompts, which can be stored or exposed.

- Do deepfakes only affect large companies? No. SMEs are easy targets because they have fewer defenses, as seen in the cases of small Brazilian fintechs.

- How does Shadow AI differ from Shadow IT? Shadow AI is a subset of Shadow IT focused on AI tools, with higher risk because of the data these tools process.

Assessing the security of your network infrastructure

Before implementing solutions, assess the current state. For IT professionals providing services or managing internally, start with a simple audit.

Steps for a quick assessment

- Map AI usage: Ask the team which tools they use (e.g., through anonymous surveys). Identify unauthorized access.

- Check for network vulnerabilities: Use a DNS analysis tool to scan DNS traffic and identify suspicious AI-related domains.

- Analyze access logs: Look for patterns in data going out to generative AI services.

- Assess compliance: Ensure data policies comply with regulations such as the LGPD in Brazil.
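The DNS and log checks above can be sketched as a small script. This is a minimal sketch, not a definitive implementation: it assumes a hypothetical log format of one query per line (`<timestamp> <client_ip> <queried_domain>`), and the watchlist `AI_DOMAINS` and the function names are illustrative assumptions. Adapt the parsing to whatever your resolver or firewall actually exports.

```python
from collections import Counter

# Illustrative watchlist (an assumption, not an exhaustive or official list).
AI_DOMAINS = {"chatgpt.com", "openai.com", "gemini.google.com", "claude.ai"}


def matches_watchlist(domain, watchlist):
    """Return True if domain equals a watchlist entry or is a subdomain of one."""
    domain = domain.lower().rstrip(".")
    return any(domain == d or domain.endswith("." + d) for d in watchlist)


def count_ai_queries(log_lines, watchlist=AI_DOMAINS):
    """Count watchlist hits per (client, domain) pair.

    Assumes each line looks like "<timestamp> <client_ip> <queried_domain>".
    Lines that do not have exactly three fields are skipped.
    """
    hits = Counter()
    for line in log_lines:
        parts = line.split()
        if len(parts) != 3:
            continue
        _, client, domain = parts
        if matches_watchlist(domain, watchlist):
            hits[(client, domain.lower().rstrip("."))] += 1
    return hits


sample_log = [
    "2025-01-10T09:00:01 192.168.0.12 chatgpt.com",
    "2025-01-10T09:00:05 192.168.0.12 api.openai.com.",
    "2025-01-10T09:01:00 192.168.0.30 example.com",
    "2025-01-10T09:02:00 192.168.0.30 notopenai.com",
    "malformed line",
]

for (client, domain), n in sorted(count_ai_queries(sample_log).items()):
    print(client, domain, n)
```

Matching on whole domain labels (an exact match or a `.`-prefixed suffix) avoids false positives such as `notopenai.com`, which a plain substring search would flag.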

For budget-strapped SMEs in Brazil, the priority is whatever disrupts operations most: phishing and social engineering at the top, ransomware close behind, then exposed services without patches or MFA. IT professionals and IT service providers should tackle these first with strong authentication, an asset inventory, and disciplined updates.

Several Brazilian sources help with this prioritization. The ANPD offers a guide and checklist designed for small-scale data processing agents, useful for defining security policies, access management, transaction logging, and contractual responsibilities with service providers. CERT.br statistics help keep the focus on phishing and exposed vulnerable services, the incidents that appear most often. In the financial sector, FEBRABAN points out that phishing and malvertising account for more than 75% of observed threats, so email controls, blocking malicious domains, and awareness training yield a high return per real invested. For Pix operators, the Central Bank publishes and updates the Security Manual with requirements such as API log retention, QR Code protection, and proper use of certificates, which those managing the SME environment must track and apply.

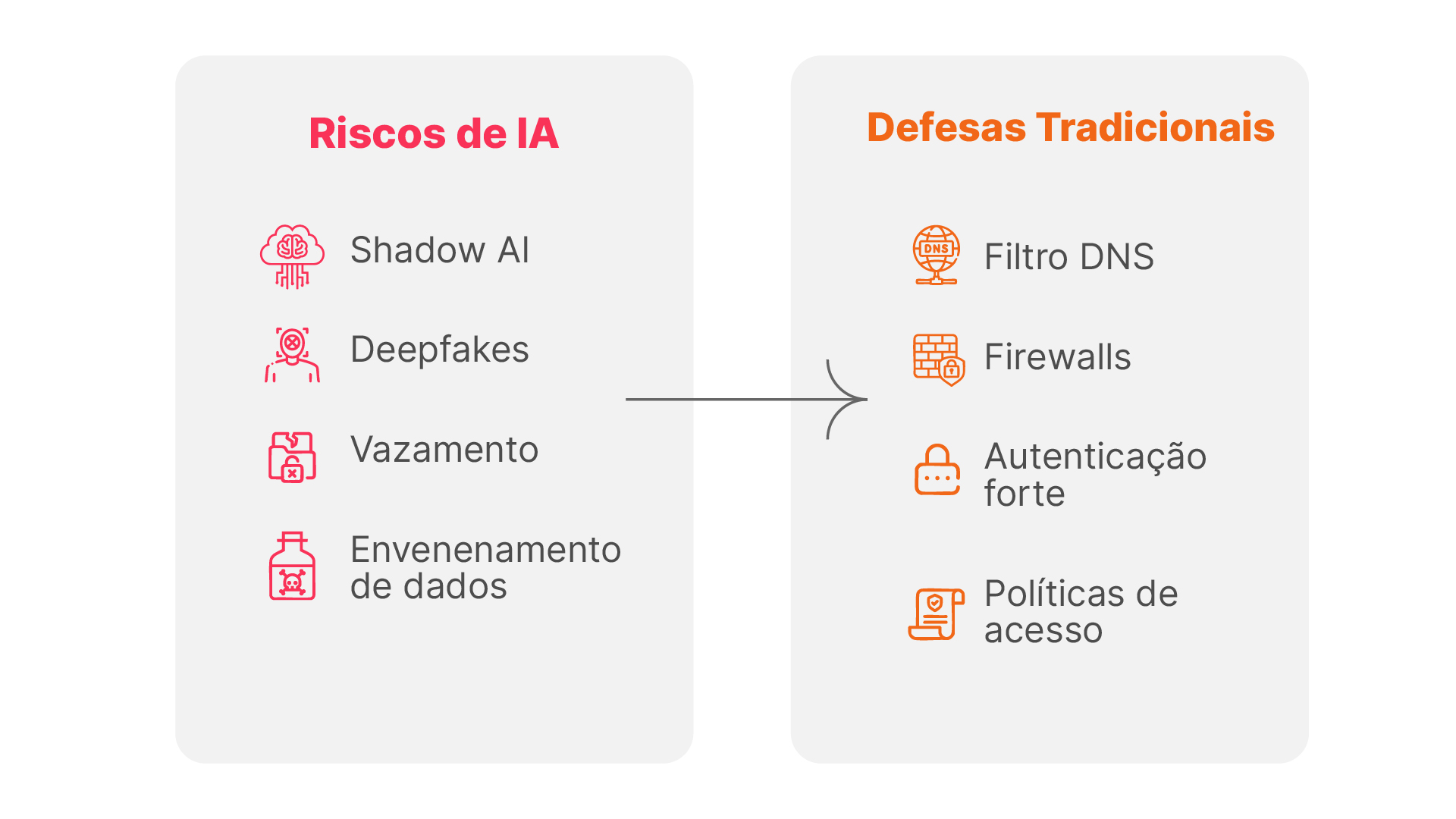

Strengthening the Network: Practical Solutions Focused on DNS

DNS protection tools are affordable and effective for SMBs. They act at the name-resolution step, filtering a request before the user's device ever connects to the destination.

Why DNS Filtering is Essential Against AI Risks

DNS filters block malicious or risky domains, which limits Shadow AI use and access to services that facilitate deepfake creation. They are easy to implement and require no additional hardware.

- Benefits for SMBs: Granular control without overloading IT. Exceptions can be granted for approved tools.

- Workflow integration: For managed service providers (MSPs), it facilitates remote management of multiple clients.

A practical option is Lumiun DNS, which offers robust protection with a focus on content filtering. Its new "Security -> Artificial Intelligence" feature blocks access to generative AI services that may pose risks of data leaks or misuse. You can manually allow desired services from the customized list, maintaining the balance between innovation and security.
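To make the idea of "block a category, allow approved exceptions" concrete, here is a minimal sketch of how such a decision could be modeled. It is not Lumiun DNS's implementation or API: `DnsPolicy`, `CATEGORY_BY_DOMAIN`, and `decide` are hypothetical names, and the tiny domain-to-category map stands in for the curated databases real filters rely on.

```python
from dataclasses import dataclass, field


@dataclass
class DnsPolicy:
    """Hypothetical policy: blocked categories plus explicit exceptions."""
    blocked_categories: set = field(default_factory=set)
    allowed_domains: set = field(default_factory=set)


# Illustrative domain-to-category map (an assumption for this sketch).
CATEGORY_BY_DOMAIN = {
    "chatgpt.com": "generative-ai",
    "gemini.google.com": "generative-ai",
    "claude.ai": "generative-ai",
}


def _candidates(domain):
    """Yield the domain and each parent domain, most specific first."""
    labels = domain.lower().rstrip(".").split(".")
    # Stop before the bare top-level label (e.g. "com").
    for i in range(len(labels) - 1):
        yield ".".join(labels[i:])


def decide(domain, policy):
    """Return "allow" or "block" for a queried domain."""
    names = list(_candidates(domain))
    # Explicit exceptions win over category blocks.
    if any(name in policy.allowed_domains for name in names):
        return "allow"
    for name in names:
        if CATEGORY_BY_DOMAIN.get(name) in policy.blocked_categories:
            return "block"
    return "allow"


policy = DnsPolicy(
    blocked_categories={"generative-ai"},
    allowed_domains={"chatgpt.com"},  # tool approved by IT in this example
)

for name in ["chatgpt.com", "gemini.google.com", "example.com"]:
    print(name, decide(name, policy))
```

Checking the exception list before the category list is the design choice that lets IT block a whole category by default while still releasing the few tools it has approved.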

Practical Guide: Implementing Protection Against Shadow AI and Deepfakes

- Configure basic filtering: Enable blocks for risk categories, such as basic or advanced protection.

- Customize for AI: Use the specific filter for generative AI, adjusting exceptions (e.g., allow Gemini Pro and ChatGPT Plus).

- Monitor and adjust: Review weekly reports to refine rules.

- Educate your team: Share clear policies on AI usage, integrating with quick training sessions.
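The "monitor and adjust" step can be partly automated. The sketch below assumes a hypothetical weekly export in which each event is a dict with the keys `user`, `domain`, and `action`; these field names, the `review_candidates` function, and the threshold are illustrative assumptions, not the report format of any particular product. It lists blocked domains that several different people tried to reach, which are good candidates for either an approved exception or a reminder about the AI usage policy.

```python
from collections import defaultdict


def review_candidates(events, min_users=3):
    """Return blocked domains requested by at least `min_users` distinct users.

    `events` is an iterable of dicts with the hypothetical keys
    "user", "domain", and "action".
    """
    users_by_domain = defaultdict(set)
    for event in events:
        if event["action"] == "blocked":
            users_by_domain[event["domain"]].add(event["user"])
    return sorted(
        domain
        for domain, users in users_by_domain.items()
        if len(users) >= min_users
    )


weekly_events = [
    {"user": "ana", "domain": "claude.ai", "action": "blocked"},
    {"user": "bruno", "domain": "claude.ai", "action": "blocked"},
    {"user": "carla", "domain": "claude.ai", "action": "blocked"},
    {"user": "ana", "domain": "claude.ai", "action": "blocked"},
    {"user": "bruno", "domain": "gemini.google.com", "action": "blocked"},
    {"user": "carla", "domain": "chatgpt.com", "action": "allowed"},
]

print(review_candidates(weekly_events))
```

Counting distinct users rather than raw requests keeps one person's repeated retries from making a domain look like a team-wide need.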

In real-world scenarios, such as those discussed in generative AI risk analyses, this reduces exposure without hindering productivity.

If you manage IT for clients, integrate DNS filters into service packages. Clients see immediate value in reports that show blocked threats, strengthening your partnership.

A safe future with AI

Navigating the challenges of AI, deepfakes, and Shadow AI doesn't have to be daunting. With a practical approach (assessing risks, implementing DNS filters, and educating teams), SMBs can turn AI into a positive force. Tools like Lumiun DNS simplify this process by offering precise control at the DNS level.

Whether you're strengthening your infrastructure or helping customers, start small: evaluate today and implement tomorrow. The future of cybersecurity is collaborative, and affordable solutions are within reach.